Creating Audio on the Web Is Easy—Until It’s Time to Export

For years, advanced audio processing in the browser has been well within reach, thanks to the powerful Web Audio API—a go-to solution for web developers building dynamic and innovative audio applications. But while creating audio has become simpler than ever, exporting it seamlessly remains a surprisingly tricky challenge in certain scenarios.

Problem statement

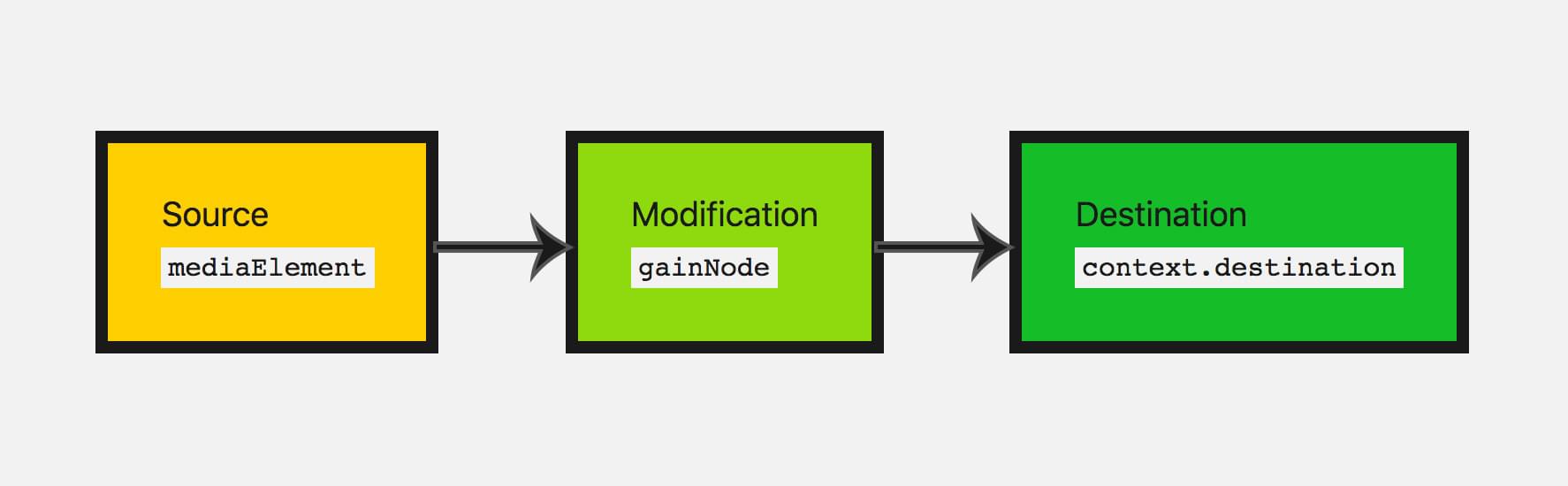

To appreciate why exporting audio is a challenge, it’s essential to understand what the Web Audio API enables developers to accomplish.

Today, the browser is capable of handling far more advanced media operations than it could just a few years ago. Cross-browser support for WebAssembly has been available since 2017 and continues to improve. Similarly, WebCodecs, introduced in 2021, has expanded browser functionality significantly. Naturally, developers and companies are bringing previously unimaginable applications to the browser, such as Photoshop itself, DAWs, and video editors.

One of the most exciting applications is bringing full-fledged Digital Audio Workstations (DAWs) to the browser. By focusing on DAWs in this article, we’ll explore both the power of the Web Audio API and the limitations developers face when attempting to export processed audio.

As a basic example, an audio graph with a delay and a linear gain ramp takes only a few lines of code:

```javascript
const audioContext = new AudioContext();

// `src` is the URL of the audio file to play
const audioEl = new Audio();
audioEl.src = src;

// Route the media element into the audio graph
const source = audioContext.createMediaElementSource(audioEl);

// createDelay() takes the *maximum* delay time; the actual delay is set on delayTime
const delayNode = audioContext.createDelay(1);
delayNode.delayTime.value = 0.1;

// Fade in linearly from silence to full volume over 100 ms
const gainNode = audioContext.createGain();
gainNode.gain.setValueAtTime(0, audioContext.currentTime);
gainNode.gain.linearRampToValueAtTime(1, audioContext.currentTime + 0.1);

source.connect(delayNode).connect(gainNode).connect(audioContext.destination);

// Browsers may require a user gesture before audio can start playing
audioEl.play();
```

For more advanced use cases, it only takes a few more lines of code to split channels and process each one differently, apply a compressor, or perform a stereo pan for a surround effect, for example.

Export the manipulated audio

Naturally, after a decent amount of editing, the user will want to export the result. This is where the problems start.

The root cause is that AudioContext processes audio in real time. Processing and exporting a 60-minute audio file through it therefore takes the same 60 minutes. For users, this is a sluggish and unreasonable experience.

```javascript
// Real-time playback: the media element is routed through an AudioContext
const audioContext = new AudioContext();
const audioElement = document.querySelector('audio');
const track = audioContext.createMediaElementSource(audioElement);
```

To solve this issue, OfflineAudioContext was introduced. It is a variant of AudioContext designed for non-realtime processing. Instead of running at playback speed, it renders as fast as the machine allows, which takes a large chunk of time off workflows like rendering or exporting.
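Unlike a real-time context, an OfflineAudioContext needs to know the total length of its output before rendering starts. That length is expressed in sample-frames (samples per channel), not seconds. The helper below is a minimal, hypothetical sketch that builds the options dictionary the constructor accepts. It assumes you already know the duration you want to render.

```javascript
// Hypothetical helper: OfflineAudioContext needs its total length up front,
// expressed in sample-frames (samples per channel), not in seconds.
function offlineContextOptions(durationSeconds, sampleRate, numberOfChannels) {
  return {
    numberOfChannels,
    // Round up so a fractional duration is never cut short
    length: Math.ceil(durationSeconds * sampleRate),
    sampleRate,
  };
}

// In the browser:
//   const ctx = new OfflineAudioContext(offlineContextOptions(60, 44100, 2));
//   ... build the graph and start the sources ...
//   const rendered = await ctx.startRendering(); // resolves with an AudioBuffer
```

A 60-second stereo render at 44.1 kHz therefore needs a length of 2,646,000 frames.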

Many developers might reasonably assume that they can switch from AudioContext to OfflineAudioContext and keep using createMediaElementSource() for exporting. However, this method is not available on OfflineAudioContext. The specification defines it only on AudioContext, not on the shared BaseAudioContext interface. It was explicitly removed from the offline context in the Web Audio API specification over a decade ago. In 2018, implementers explained that support is unlikely to arrive anytime soon because media elements and offline contexts operate in fundamentally different ways.

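```javascript
// Arguments: number of channels, length in sample-frames, sample rate
const offlineContext = new OfflineAudioContext(2, 44100 * 60, 44100);

// A developer might assume this will work...
const offlineTrack = offlineContext.createMediaElementSource(audioElement);
// TypeError: offlineContext.createMediaElementSource is not a function
```

BaseAudioContext.decodeAudioData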

While developers can’t use createMediaElementSource with OfflineAudioContext, another method offers a viable way to process audio offline: BaseAudioContext.decodeAudioData. It takes a complete audio file as an ArrayBuffer and decodes it into an AudioBuffer of PCM samples that can be used directly in the Web Audio API. This makes it possible to load and manipulate audio without relying on streaming playback. Because the method is defined on BaseAudioContext, it is available on both AudioContext and OfflineAudioContext. According to the MDN documentation, this is the preferred method for creating an audio source in the Web Audio API from an audio file.

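Using the decoded audio as a source is trivial, and the audio graph used for playback can be reused:

```javascript
// `file` is a File (e.g. from <input type="file">); `offlineContext` is the
// OfflineAudioContext created above, long enough for the source plus the delay
const fileBuffer = await file.arrayBuffer();
const pcm = await offlineContext.decodeAudioData(fileBuffer);

const source = offlineContext.createBufferSource();
source.buffer = pcm;

// Every node must belong to the same context as the source
const splitter = offlineContext.createChannelSplitter(2);
const merger = offlineContext.createChannelMerger(2);

// createDelay() takes the maximum delay time; delay only the left channel by 100 ms
const delayNode = offlineContext.createDelay(1);
delayNode.delayTime.value = 0.1;

const gainNode = offlineContext.createGain();
gainNode.gain.setValueAtTime(0, offlineContext.currentTime);
gainNode.gain.linearRampToValueAtTime(1, offlineContext.currentTime + 0.1);

source.connect(splitter);
splitter.connect(delayNode, 0);   // left channel -> delay
delayNode.connect(merger, 0, 0);  // delayed left -> merger input 0
splitter.connect(merger, 1, 1);   // right channel -> merger input 1, untouched
merger.connect(gainNode).connect(offlineContext.destination);

source.start();
// Renders as fast as possible and resolves with an AudioBuffer
const rendered = await offlineContext.startRendering();
```

The rendered PCM audio can then be exported as a WAV file by prepending a header. A .wav file needs metadata such as the audio’s sample rate, bit depth, and number of channels. This metadata is stored in a header that precedes the raw PCM data.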
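The WAV header described above is only 44 bytes, so it can be written by hand. The sketch below assumes 16-bit integer PCM output and planar Float32Array channel data, the kind returned by AudioBuffer.getChannelData(). The function name encodeWav is illustrative, not part of any API. It writes the standard RIFF/WAVE header and then interleaves the clamped, scaled samples.

```javascript
/**
 * Hypothetical helper: encode planar Float32 channel data (values in [-1, 1])
 * as a 16-bit PCM WAV file. Returns an ArrayBuffer.
 */
function encodeWav(channels, sampleRate) {
  const numChannels = channels.length;
  const numFrames = channels[0].length;
  const bytesPerSample = 2; // 16-bit PCM
  const blockAlign = numChannels * bytesPerSample;
  const dataSize = numFrames * blockAlign;
  const buffer = new ArrayBuffer(44 + dataSize);
  const view = new DataView(buffer);

  const writeString = (offset, str) => {
    for (let i = 0; i < str.length; i++) view.setUint8(offset + i, str.charCodeAt(i));
  };

  // RIFF chunk descriptor
  writeString(0, 'RIFF');
  view.setUint32(4, 36 + dataSize, true); // file size minus the first 8 bytes
  writeString(8, 'WAVE');

  // "fmt " sub-chunk: describes the sample format
  writeString(12, 'fmt ');
  view.setUint32(16, 16, true);                      // sub-chunk size for PCM
  view.setUint16(20, 1, true);                       // audio format 1 = integer PCM
  view.setUint16(22, numChannels, true);
  view.setUint32(24, sampleRate, true);
  view.setUint32(28, sampleRate * blockAlign, true); // byte rate
  view.setUint16(32, blockAlign, true);              // bytes per frame
  view.setUint16(34, bytesPerSample * 8, true);      // bits per sample

  // "data" sub-chunk: interleaved samples (L, R, L, R, ...)
  writeString(36, 'data');
  view.setUint32(40, dataSize, true);

  let offset = 44;
  for (let frame = 0; frame < numFrames; frame++) {
    for (let ch = 0; ch < numChannels; ch++) {
      // Clamp to [-1, 1], then scale to the signed 16-bit range
      const s = Math.max(-1, Math.min(1, channels[ch][frame]));
      view.setInt16(offset, s < 0 ? s * 0x8000 : s * 0x7fff, true);
      offset += bytesPerSample;
    }
  }
  return buffer;
}
```

In the browser, the result of `startRendering()` could be wrapped as `new Blob([encodeWav([rendered.getChannelData(0), rendered.getChannelData(1)], rendered.sampleRate)], { type: 'audio/wav' })` and offered as a download. Note that the whole output still sits in memory.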

The first limitation is that this method only works on complete file data, not fragments of audio file data. In practice, this means the entire source audio file must be fully decoded and loaded into memory upfront. This is already a dealbreaker in scenarios where the complete file cannot be preloaded—for example, while processing streamed audio data.

But there’s more: when working with the Web Audio API’s decodeAudioData function, developers are unable to access audio samples as they are decoded. Instead, the function completes only after the entire source has been fully decoded. For large audio files, this can quickly lead to serious memory usage concerns.

Consider a common scenario: a single stereo audio file of professional quality (32-bit, 44.1 kHz) that is 60 minutes long requires about 1.27 GB (roughly 1,211 MiB) of memory once decoded, and that is for just one file. For users who need to sample or merge from dozens of such source files, this approach becomes untenable. Memory-related crashes and severe performance issues are all but inevitable.
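That figure is easy to check. An AudioBuffer stores decoded samples as 32-bit floats (4 bytes each) regardless of the source file's bit depth. The footprint is therefore simply frames × channels × 4 bytes. A minimal sketch (the function name is illustrative):

```javascript
// AudioBuffer holds every decoded sample as a 32-bit float, whatever the source format
const BYTES_PER_FLOAT32 = 4;

function decodedSizeBytes(durationSeconds, sampleRate, numberOfChannels) {
  const frames = Math.ceil(durationSeconds * sampleRate);
  return frames * numberOfChannels * BYTES_PER_FLOAT32;
}

const bytes = decodedSizeBytes(60 * 60, 44100, 2); // one hour, stereo, 44.1 kHz
console.log(bytes);                        // 1270080000
console.log(Math.round(bytes / 2 ** 20));  // 1211 (MiB)
```

Because the cost scales linearly with duration and channel count, a project with dozens of hour-long stems quickly reaches tens of gigabytes.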

To make matters worse, the Web Audio API’s AudioBuffer object has a fixed length and cannot be appended to. In other words, even if developers decode audio data on the fly, they cannot use it to dynamically feed an audio graph. This limitation makes it impractical to process or export audio incrementally.
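To see why, consider what "appending" would actually require. An AudioBuffer's length is fixed when it is created, and an AudioBufferSourceNode's buffer can only be assigned once. Growing decoded audio therefore means allocating a larger array and copying everything decoded so far. The sketch below shows that copy on plain Float32Array channel data, the kind returned by AudioBuffer.getChannelData(). `appendChannelData` is a hypothetical helper, not part of the Web Audio API.

```javascript
// Hypothetical helper: "append" a decoded chunk to existing channel data.
// Since the length of typed arrays (and AudioBuffers) is fixed, a new,
// larger array must be allocated and the old contents copied into it.
function appendChannelData(existing, chunk) {
  const grown = new Float32Array(existing.length + chunk.length);
  grown.set(existing, 0);             // copy every previously decoded sample
  grown.set(chunk, existing.length);  // then the new chunk
  return grown;
}

const soFar = new Float32Array([0.1, 0.2]);
const next = new Float32Array([0.3]);
const combined = appendChannelData(soFar, next); // length 3
```

Every append copies the entire history. Feeding n equal-sized chunks this way costs O(n²) copying in total and still ends with the whole file in memory, which is exactly what incremental processing was supposed to avoid.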

Available Solutions

Clearly, browser-based audio editing scenarios have not been a top priority for API designers. Similar challenges appear in the context of video editing, where in-browser workflows often fall short of providing editing and export capabilities. When it comes to audio, efficiently exporting manipulated or processed files is no small feat.

To my knowledge, there is currently no straightforward or native way in the browser to export manipulated audio without introducing significant memory overhead, especially for longer or more complex audio projects. Developers must contend with browser limitations, which require creative and sometimes resource-intensive workarounds.

Backend heavy lifting

At this point, the simplest solution to tackle the limitations of OfflineAudioContext is to fall back on a traditional backend-heavy approach. This involves performing editing and previews in the browser via the Web Audio API, then offloading the export process to the backend. A backend server can process the source files using tools like FFmpeg to generate the final exported file, which is then sent back to the user. While the method of transmitting audio data between the frontend and backend can vary in complexity, this type of pipeline is generally considered a standard task for a full-stack engineer.

However, there are some downsides to this approach. One major inconvenience is the need to implement the same audio pipeline twice: once in the browser for real-time previews using the Web Audio API, and again on the backend for exporting with a suitable tool like FFmpeg.
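To make the duplication concrete, take a graph with a delay followed by a linear fade-in, like the one at the start of this article. The hypothetical helper below rebuilds that graph as FFmpeg command-line arguments for a server-side export, using FFmpeg's `adelay` and `afade` filters. It assumes a fixed channel count. The function name and options object are illustrative.

```javascript
// Hypothetical helper: mirror the browser graph (delay + linear fade-in) as an
// FFmpeg filter chain for a backend export. Assumes every channel gets the same delay.
function buildFfmpegArgs(inputPath, outputPath, { delaySeconds, fadeInSeconds, channels }) {
  // adelay takes one delay per channel, in milliseconds, separated by "|"
  const delayMs = Math.round(delaySeconds * 1000);
  const adelay = `adelay=${Array(channels).fill(delayMs).join('|')}`;
  // afade's default curve ("tri") is a linear slope, like linearRampToValueAtTime
  const afade = `afade=t=in:st=0:d=${fadeInSeconds}`;
  // Same order as the browser graph: delay first, then the gain ramp
  return ['-y', '-i', inputPath, '-af', `${adelay},${afade}`, outputPath];
}

const args = buildFfmpegArgs('in.wav', 'out.wav', { delaySeconds: 0.1, fadeInSeconds: 0.1, channels: 2 });
// On the server, these could be passed to Node's child_process.spawn('ffmpeg', args)
```

Every node added to the browser graph needs a matching filter here. Keeping the two definitions in sync, including subtle details like automation curves, is exactly the maintenance burden described above.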

Beyond the redundant implementations, there’s a risk of inconsistencies between the browser preview and the final exported file. Such mismatches can arise because the Web Audio API and FFmpeg handle audio processing differently. Differences in buffer handling, sample rate conversion, and interpolation algorithms can cause minor discrepancies between what the user hears in the browser and what is exported. While this may not matter for casual use cases, professional studio-grade applications can be highly sensitive to such inaccuracies. Even a millisecond of drift or a slight tonal difference in the exported audio can disrupt workflows.

Additionally, while FFmpeg offers numerous advantages—such as broad format support, efficient file encoding, and advanced audio manipulation—it operates independently of Web Audio API’s processing pipeline. This could make it difficult to achieve one-to-one parity in sound processing between the two systems.

Finally, it’s important to consider scalability. When deployed in applications with high user volumes, relying on backend-heavy processing could result in server bottlenecks or increased infrastructure costs, especially when exporting large or complex audio projects.

And because exporting depends on the server, this approach is inadequate for users working in environments with unreliable or no internet access.

WebAssembly

So far, we’ve been focused on exploring trade-offs. One can easily use Web Audio for DAW scenarios and export with it, as long as the source files are small. The Web Audio API is a great tool for working with small media files—it’s lightweight and well-suited for tasks like playback and real-time effects. However, its real-time nature and limited exporting capabilities make it impractical for larger projects with export needs. But isn't there a no-compromise solution?

The closest option to a perfect user experience is via WebAssembly (WASM).

ffmpeg.wasm

One alternative for exporting audio in the browser is to use ffmpeg.wasm. This is a WebAssembly (WASM) build of the FFmpeg library, which allows performing audio and video processing directly in the browser. FFmpeg can handle tasks like compression, transcoding, and exporting with ease.

To mitigate memory issues, input files can be mounted into ffmpeg.wasm’s virtual filesystem using Emscripten’s WORKERFS. WORKERFS reads data from File objects on demand through the browser’s File API instead of copying the entire file into WebAssembly memory up front. Here’s an example of how that might work in practice:

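```javascript
import { FFmpeg } from '@ffmpeg/ffmpeg';

const ffmpeg = new FFmpeg();
await ffmpeg.load(); // loads the ffmpeg core (see the ffmpeg.wasm docs for coreURL options)

// `file` is a File, e.g. from <input type="file">
const inputDir = '/input';
await ffmpeg.createDir(inputDir);

// WORKERFS exposes the File read-only, without copying it into WASM memory
await ffmpeg.mount('WORKERFS', { files: [file] }, inputDir);

// Mounted files keep their original names; the output is written to MEMFS
await ffmpeg.exec(['-i', `${inputDir}/${file.name}`, 'output.mp3']);
const output = await ffmpeg.readFile('output.mp3');
```

After execution, the output file generated by ffmpeg.wasm resides in its in-memory filesystem (MEMFS), so nothing is written to disk by default. As long as the output is a small, compressed file (e.g., an MP3 that isn’t hours long), this limitation likely won’t be an issue. With uncompressed PCM audio or very large audio files, however, memory limits become a real concern, especially since 32-bit WebAssembly memory is capped at 4 GiB. I haven’t yet looked into whether ffmpeg.wasm provides a hook or pre-built mechanism for reading chunks of data as they are written. However, by intercepting the write method in the WASM filesystem, it should be possible to offload data incrementally to persistent storage, such as the Origin Private File System (OPFS). This would provide a scalable way to handle larger files or progressively save audio data.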

On the downside, the same arguments against using separate stacks for audio preview and export still apply. Depending on the use case, this mismatch could be either insignificant or problematic.

Custom implementation in WASM

From the perspective of the end-user experience, is it possible to have it all? Imagine a solution that is not only resource-efficient and capable of offline processing but also guarantees a ‘what-you-hear-is-what-you-get’ experience. The answer is almost yes—but achieving this requires a paradigm shift.

Traditional browser-based methods often rely on a mix of specific web APIs and libraries, which can introduce limitations when performance and precision are critical. Instead, why not leverage the power of native applications—proven solutions that have delivered exceptional performance for decades? With WebAssembly (WASM), developers can bring these native-like capabilities directly to the browser.

Thousands of native applications have already solved demanding challenges like audio editing and exporting. By compiling these powerful tools to WASM and integrating them into web apps, it is possible to achieve offline support, efficient resource consumption, and a precise ‘what-you-hear-is-what-you-get’ workflow, all in the browser.

For developers looking to overcome the limitations of the Web Audio API, FFmpeg provides a powerful alternative. It enables access to a vast library of filters, processing features, and support for nearly every audio format imaginable—far beyond what Web Audio offers out of the box. Some excellent open-source libraries even demonstrate how to build a lightweight C++ layer around FFmpeg’s core functionality, bringing its capabilities into the browser environment.

However, with great power comes great responsibility. Unlike working with higher-level APIs such as Web Audio, using FFmpeg places nearly all control—and the associated challenges—into the developer’s hands. This means determining how to read audio files, when to open and close codecs, whether to process audio streams eagerly or lazily, and implementing robust threading and memory-management strategies to avoid performance bottlenecks or crashes.
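Even the first of those decisions is a real design choice. The hypothetical `readInChunks` generator below is a minimal sketch of the lazy option on the JavaScript side. It reads a File, or any Blob, in fixed-size slices with `Blob.slice()` and `arrayBuffer()`, so only one chunk is held at a time. The 1 MiB default chunk size is an arbitrary assumption. Handing each chunk to a WASM decoder is left out, since that depends entirely on your own bindings.

```javascript
// Hypothetical helper: lazily read a Blob/File in fixed-size chunks instead of
// loading the whole file at once. Each chunk can be processed and released
// before the next one is read.
async function* readInChunks(blob, chunkSize = 1 << 20) {
  for (let offset = 0; offset < blob.size; offset += chunkSize) {
    // slice() is cheap; bytes are only read when arrayBuffer() is awaited
    const chunk = await blob.slice(offset, offset + chunkSize).arrayBuffer();
    yield new Uint8Array(chunk);
  }
}

// Usage (inside an async function):
//   for await (const bytes of readInChunks(file)) {
//     feedDecoder(bytes); // hypothetical call into your WASM module
//   }
```

The eager alternative is a single `await file.arrayBuffer()`. It is simpler, but it reintroduces the whole-file memory cost discussed earlier.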

Exporting a processed file also becomes a manual task: developers must orchestrate the various processing steps and handle the details of file writing themselves. These responsibilities extend well beyond the traditional skill set of web developers, who may be more familiar with JavaScript-based frameworks and browser APIs than with the intricacies of C development and low-level audio engineering.

Furthermore, while WebAssembly might seem designed for tasks like this, integrating such a solution with Web Audio is not entirely seamless, but that’s a discussion for another article.

TL;DR

There isn’t a one-size-fits-all solution for exporting manipulated audio in the browser. Every method has its own trade-offs, balancing complexity, memory usage, and the specific requirements of the application. Here’s a summary of the available options.

Web Audio API only

- Pros: Extremely simple to implement and requires no additional libraries or backend. Go-to solution for any multimedia application without exporting needs.

- Cons: All decoded source files remain in memory, which can lead to performance issues. Exporting options are suitable only for applications where source files are guaranteed to be small.

Web Audio API + backend FFmpeg

- Pros: Relatively easy to set up using FFmpeg on a server for exporting processed audio.

- Cons: Uses separate stacks for previewing and exporting audio, which may cause inconsistencies or difficulties in advanced use cases. Lacks offline support. Might introduce significant server costs.

Web Audio API + client-side FFmpeg

- Pros: Also relatively easy and performs all operations in the browser, allowing for offline compatibility. No server costs.

- Cons: Like the backend approach, it uses a different stack for previewing and exporting, potentially causing issues with sync or consistency in complex scenarios.

Custom WASM implementation

- Pros: Provides the most consistent experience by using the same stack for both previewing and exporting audio. Leverages highly mature, battle-tested libraries like FFmpeg for advanced multimedia editing.

- Cons: Highly complex and requires expertise in systems programming languages like C or Rust, as well as a deep understanding of FFmpeg and WASM integration.

- Best for: Advanced use cases and applications requiring both performance and flexibility.

Offering developers a way to dynamically feed an AudioBufferSourceNode, or supporting createMediaElementSource() in OfflineAudioContext, would effectively solve this problem. This small addition to the Web Audio API could provide a straightforward way to bridge the gap between real-time and offline contexts, simplifying workflows and enabling smooth exporting of edited audio. Such a change would not only make this article shorter but also eliminate a roadblock that has been a notable pain point for many multimedia applications.